I've been doing SEO long enough to know that when something grows too fast, you should pay attention. Not because you want to copy it — but because the crash that follows usually teaches you more than the growth ever did.

Grokipedia is that crash.

In October 2025, Elon Musk's xAI launched an AI-generated encyclopedia with 885,279 articles. Two months later, it was pulling 3.2 million clicks a month from Google. By February 2026, the whole thing collapsed — across Google, AI Overviews, and ChatGPT. Simultaneously.

This isn't a story about a bad website. Grokipedia actually did a lot of things right from a technical SEO standpoint. That's what makes it such a useful case study. It shows you exactly where the line is between "Google will index this" and "Google will trust this."

Here's the full timeline. Every phase. Every data point. And what it means if you're publishing content at scale in 2026.

How It Started: 885,000 Pages, Day One

Grokipedia went live on October 27, 2025, tagged as version 0.1.

The concept was simple enough: build an alternative to Wikipedia, but instead of volunteer editors, use xAI's Grok language model to generate and fact-check every article. Users could suggest corrections through a pop-up form, but couldn't directly edit anything. Grok reviewed the suggestions.

Think about that for a second. Wikipedia took over two decades to build 6.8 million English articles with millions of human contributors. Grokipedia launched with 885,279 articles on day one. All generated by a single AI model.

Here's what the launch looked like:

- 885,279 articles live from the start

- 8 languages — English, Spanish, German, French, Portuguese, Chinese, Russian, Japanese

- Clean URL structure: every article lived at grokipedia.com/page/Article_Title

- Built on Next.js with server-side rendering (important later)

- Automated internal linking based on semantic relationships between topics

- Legal pages all linked to x.ai, Musk's AI parent company

The launch traffic spike was big: 460,400 visits on October 28, the day after launch. But it was mostly curiosity. By day three, traffic had dropped 70%. Within two weeks, daily visits settled into a range of 30,000 to 50,000. The novelty had worn off.

Nobody was talking about Grokipedia's organic search performance yet. Because there was almost nothing to talk about.

That was about to change.

Phase 1: The Indexing Sprint (October 28 – November 5)

This is where it starts getting interesting from an SEO perspective.

Both Google and Bing started crawling Grokipedia within 24 hours of launch. Here's how the indexing played out:

| Date | Google Indexed | Bing Indexed |
|---|---|---|
| Oct 28 (Day 2) | 26 pages | 26 pages |
| Nov 3 (Day 8) | ~478 pages | ~33,000 pages |
| Nov 5 (Day 10) | ~1,400 pages | ~52,000 pages |

Bing was aggressive — 52,000 pages indexed in ten days. Google was slower and more deliberate, but still: 1,400 AI-generated pages indexed in under two weeks. For a brand-new domain. Running entirely on AI content.

This raised eyebrows across the industry. Martin Jeffrey, an SEO consultant, posted on LinkedIn:

"Grokipedia just launched with 900,000 AI-generated pages, and they're already showing up in Google. Google has been absolutely hammering sites for 'scaled content abuse' this year."

He was right to flag the double standard. Throughout 2024 and 2025, Google had been aggressively penalising sites that used AI to mass-produce content. The March 2024 core update specifically targeted "scaled content abuse." Small publishers were losing their entire sites over it.

And here was Grokipedia — nearly a million AI-generated pages — getting indexed without friction.

If you've ever had indexing issues with your own site, you know how frustrating that double standard feels.

Phase 2: The Organic Explosion (November 2025 – January 2026)

Now we get to the numbers that made everyone pay attention.

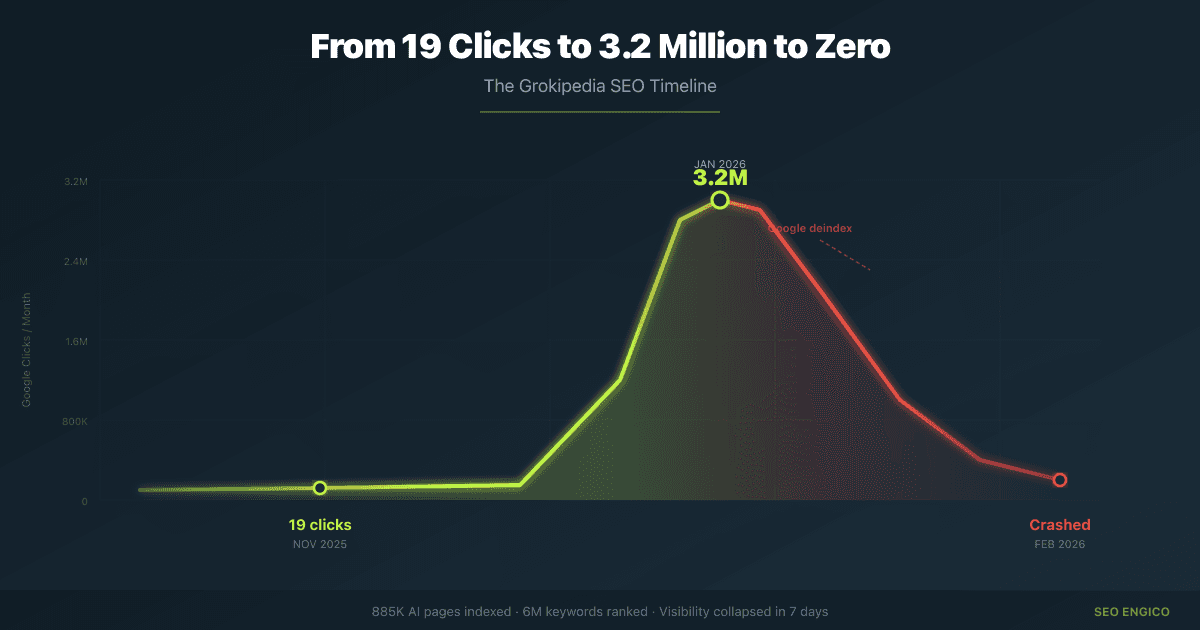

In November 2025, Grokipedia received 19 clicks from Google Search for the entire month. Nineteen. The site was essentially invisible in organic results.

By January 2026, that number was 3.2 million clicks per month.

Read that again. From 19 to 3,200,000. In roughly 60 days.
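To put that jump in perspective, here's the back-of-envelope arithmetic. The two click figures are the ones quoted above; the 60-day window is my rounding, and perfectly steady compounding is a simplifying assumption, not a claim about how the growth actually unfolded.

```python
import math

nov_clicks = 19          # Google clicks, November 2025 (figure from this article)
jan_clicks = 3_200_000   # Google clicks, January 2026 (figure from this article)
days = 60                # "roughly 60 days" is an approximation

# Overall multiple between the two monthly figures.
growth_factor = jan_clicks / nov_clicks

# Implied daily growth rate if the increase had compounded evenly.
daily_rate = growth_factor ** (1 / days) - 1

# Days needed to double at that implied rate.
doubling_days = math.log(2) / math.log(1 + daily_rate)

print(f"{growth_factor:,.0f}x overall")
print(f"{daily_rate:.1%} per day, doubling every {doubling_days:.1f} days")
```

That works out to roughly a 168,000-fold increase, or clicks doubling about every three and a half days if the growth had been perfectly smooth.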

The site was ranking for 6 million keywords. Google had indexed over 900,000 pages. And the article count had ballooned from the original 885,000 to over 6 million, which is close to 90% of the 6.8 million English Wikipedia articles mentioned earlier.

So what happened? How does a two-month-old domain go from invisible to millions of clicks that fast?

The Technical Foundation Was Genuinely Good

I'll give credit where it's due. Grokipedia's technical SEO was solid.

The site was built on Next.js with full server-side rendering. That means every page served complete, pre-rendered HTML to search engine crawlers. No client-side JavaScript rendering required. No "render budget" concerns. Google's crawlers could read every page immediately, exactly as a user would see it.
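If you want to sanity-check this on your own site, one rough test is to count the words that are already present in the raw HTML before any JavaScript runs. The sketch below uses only Python's standard library. The class name, function name, and the two sample pages are mine, for illustration only, and a real check would start by fetching the page source.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect words present in the raw HTML, skipping script and style blocks."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Only count text that sits outside script/style elements.
        if self._skip_depth == 0:
            self.words.extend(data.split())


def count_prerendered_words(raw_html: str) -> int:
    """Number of words a crawler could read without executing JavaScript."""
    parser = VisibleText()
    parser.feed(raw_html)
    parser.close()
    return len(parser.words)


# Hypothetical server-rendered page: the article text is in the HTML itself.
ssr_page = (
    "<html><body><article><h1>Machine learning</h1>"
    "<p>Machine learning is a field of study.</p></article></body></html>"
)

# Hypothetical client-rendered shell: content only appears after JS runs.
csr_page = (
    '<html><body><div id="root"></div>'
    '<script>window.__DATA__ = {"title": "Machine learning"};</script>'
    "</body></html>"
)

print(count_prerendered_words(ssr_page), count_prerendered_words(csr_page))
```

A page that scores near zero here is relying on the crawler to render JavaScript before it can see anything.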

The URL structure was clean and logical: grokipedia.com/page/Article_Title. No query parameters, no hash fragments, no unnecessary nesting. Every URL was descriptive and crawlable.
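Here's a minimal sketch of what "clean" means in practice. The `/page/Article_Title` pattern comes from the site itself; the `https` scheme, the spaces-to-underscores rule, and both function names are my assumptions for illustration.

```python
from urllib.parse import quote, urlsplit

# The path pattern is from the article; the https scheme is an assumption.
BASE = "https://grokipedia.com/page/"


def article_url(title: str) -> str:
    """Build a URL from a title, assuming spaces become underscores."""
    slug = "_".join(title.split())
    return BASE + quote(slug, safe="_")


def is_clean_article_url(url: str) -> bool:
    """True when the URL has no query, no fragment, and exactly /page/<title>."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    return (
        not parts.query
        and not parts.fragment
        and len(segments) == 2
        and segments[0] == "page"
    )


print(article_url("Machine learning"))
print(is_clean_article_url("https://grokipedia.com/page?id=42#intro"))
```

The same kind of check is easy to run across a sitemap export to spot parameterised or deeply nested URLs.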

And then there was the internal linking.

Every article was automatically cross-linked to semantically related topics. If you were reading about machine learning, the page linked to articles on neural networks, deep learning, supervised learning, and dozens of related concepts. The result was a dense, interconnected web of pages — exactly the kind of site architecture that search engines reward.
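Grokipedia hasn't published how its linking works, so treat the following as a toy illustration of the general idea rather than their method. It scores topic overlap with Jaccard similarity on keyword sets; the keyword sets, thresholds, and function names are all hypothetical.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Share of keywords two articles have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def related_articles(
    articles: dict[str, set[str]],
    title: str,
    top_n: int = 3,
    min_score: float = 0.2,
) -> list[str]:
    """Return up to top_n other titles whose keywords overlap enough to link."""
    target = articles[title]
    scored = [
        (jaccard(target, terms), other)
        for other, terms in articles.items()
        if other != title
    ]
    # Highest similarity first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [other for score, other in scored if score >= min_score][:top_n]


# Hypothetical keyword sets for four articles.
articles = {
    "Machine learning": {"model", "training", "data", "algorithm"},
    "Neural network": {"model", "training", "layer", "neuron"},
    "Supervised learning": {"model", "training", "data", "label"},
    "French cuisine": {"butter", "wine", "sauce"},
}

print(related_articles(articles, "Machine learning"))
```

A production system would more likely use embeddings than keyword sets, but the shape is the same: score every candidate pair, keep the strongest matches, and link them.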

If you stripped the Grokipedia logo off the site and showed Google the crawl data, it would look structurally identical to Wikipedia. And Google's algorithms are deeply familiar with Wikipedia's patterns. They've been using Wikipedia as a knowledge source for years.

Grokipedia essentially said: "We're going to look exactly like something you already trust." And for a while, it worked.

The Wikipedia Playbook, Executed at Machine Speed

Let's be specific about what Grokipedia borrowed from Wikipedia's architecture:

- Hierarchical categories — articles organised into nested topic taxonomies

- Consistent page templates — same layout, same structure, every single article

- Dense cross-linking — every article linked to dozens of related articles

- Clear information hierarchy — introduction, sections, subsections, references

- Unified tone — consistent voice and depth across all content

Wikipedia's quality varies wildly between articles. Some are 200-word stubs. Others are 15,000-word featured articles. Grokipedia didn't have that inconsistency. Every article hit a consistent baseline of length, structure, and depth.

From a machine evaluation standpoint — which is how Google initially processes sites — this uniformity actually helped. The algorithms saw a massive, well-structured, consistently formatted encyclopedia. It looked authoritative.

The Domain Authority Factor

Here's something most analyses missed. Grokipedia's legal pages — Terms of Service, Privacy Policy, Acceptable Use Policy — all linked directly to x.ai. That's Elon Musk's AI company.

In the world of E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness), association with a known, well-funded entity sends trust signals. Google's systems don't just evaluate the content on the page. They evaluate who's behind it, what entity is associated with it, and whether that entity has a track record.

xAI had both brand recognition and financial backing. That likely gave Grokipedia an initial trust boost that an anonymous AI encyclopedia wouldn't have received.

The Traffic Picture at Peak

Here's where Grokipedia stood at its highest point:

| Metric | Value |
|---|---|
| Google clicks per month | 3.2 million (Jan 2026) |
| Total monthly visits | 8.65 million (Nov 2025) |
| Organic keywords ranking | 6 million |
| Indexed pages in Google | 900,000+ |
| Total articles | 6+ million |
| Daily active users | ~35,000 |
| Monthly active users | ~1 million |
| Top market | United States (14.74%) |
| Mobile traffic share | 63% |
| Languages supported | 8 |

For context: Wikipedia received 3.4 billion visits in November 2025. Grokipedia's 8.65 million was about 0.25% of that. But for a domain that was two months old, running entirely on AI-generated content, with no editorial team, no contributor community, and no established backlink profile, this was uncharted territory.

Nothing at this scale had ever grown this fast in organic search.

Phase 3: The Cracks Nobody Wanted to Talk About

While the traffic charts looked incredible, there were serious problems underneath. The kind of problems that don't show up in Google Search Console but absolutely show up in Google's quality evaluation systems.

The Citation Problem

An independent analysis of Grokipedia's content found three alarming patterns:

- 12,522 citations to sources rated as low-credibility

- 1,050 self-referential citations — Grokipedia literally citing itself as a source

- 5.5% of all articles cited sources that Wikipedia has explicitly banned

Think about what this means from a quality standpoint.

If you're building an encyclopedia — a resource that exists to provide accurate, trustworthy information — and your AI is pulling citations from unreliable sources, you've got a fundamental problem. And if your AI is citing itself as evidence? That's circular reasoning baked into the content at scale.

Google's quality raters are specifically trained to evaluate source credibility. It's a core part of the E-E-A-T framework. When your content cites banned and low-quality sources, that's not a minor issue. It's a signal that gets flagged.

More Words, Fewer Sources

A comparative analysis of matched article pairs between Grokipedia and Wikipedia found something telling: Grokipedia's articles were substantially longer, but less densely referenced.

More words. Fewer sources.

The AI was generating exposition — long, readable explanations — rather than source-backed factual claims. It reads well on the surface. But when a quality evaluator checks the references, there's not much there. The content feels authoritative without actually being substantiated.
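One rough way to measure "more words, fewer sources" on your own content is citations per 1,000 words. This sketch assumes references appear as bracketed numbers like `[1]`, which is my assumption for illustration; the function name and sample texts are also hypothetical.

```python
import re

CITATION_MARKER = re.compile(r"\[\d+\]")  # assumes markers look like [1], [2], ...


def citation_density(body: str) -> float:
    """Citations per 1,000 words, ignoring the markers when counting words."""
    citations = len(CITATION_MARKER.findall(body))
    words = len(CITATION_MARKER.sub(" ", body).split())
    return 1000 * citations / words if words else 0.0


# Hypothetical short, well-referenced passage.
dense = "Water boils at 100 C at sea level.[1] Ice melts at 0 C.[2]"

# Hypothetical long passage: 100 words of exposition backed by one source.
padded = " ".join(["word"] * 100) + "[1]"

print(round(citation_density(dense), 1), citation_density(padded))
```

Comparing this number across matched articles on the same topic is more telling than looking at it in isolation.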

This is a trap a lot of AI-generated content falls into. The writing sounds confident and knowledgeable. But confidence without evidence is just... noise. And Google's systems are getting better at distinguishing between the two.

Who Wrote This? Nobody.

Here's the core E-E-A-T problem that Grokipedia couldn't solve.

Google's quality guidelines ask: who created this content? What qualifies them to create it? What experience do they bring?

For Grokipedia, the answer to all three questions was: a language model.

No bylines. No editorial board. No subject-matter experts. No peer review process. No "about the author" information. Every article — whether it covered quantum physics, childhood diseases, legal precedents, or historical events — was generated by the same AI.

For everyday, low-stakes topics, this might not matter much. But Grokipedia covered everything. Including medical conditions, legal advice, financial information, and scientific claims. Google classifies these as YMYL topics (Your Money or Your Life) and holds them to the highest quality standard.

AI-generated medical content with no expert oversight is exactly the kind of thing E-E-A-T was designed to catch.

The PolitiFact Review

In November 2025, PolitiFact fact-checked a sample of Grokipedia articles. Their finding: content that differed from Wikipedia included "unsourced content and misleading or opinionated claims."

Some articles were found to promote conspiracy theories and claims that contradict scientific consensus. Not fringe, edge-case articles buried deep in the site. Discoverable content that users and search engines could surface.

This wasn't just a content quality problem. It was a trust problem. And trust, once questioned at scale, is nearly impossible to rebuild.

Phase 4: The Crash (February 6, 2026)

On February 6, 2026, Glenn Gabe — an SEO consultant who's been tracking algorithm updates for over a decade — flagged something on social media.

Grokipedia's Google visibility had fallen off a cliff.

His data from both Sistrix and Semrush confirmed it. After months of steady growth and a massive ranking peak in January, the trajectory had reversed. Not gradually. Not slowly. The decline was steep, fast, and unmistakable.

Malte Landwehr from Peec.AI independently verified the same pattern — and added a detail that made the situation even more significant.

Grokipedia wasn't just losing Google organic rankings. It was losing visibility across:

- Google organic search — traditional blue link rankings

- Google AI Overviews — the AI-generated summaries at the top of search results

- Google AI Mode — the conversational search experience

- ChatGPT — where Grokipedia had previously been cited as a source

All of them. At the same time.

This is an important detail. If you've been thinking about AI and LLM visibility as a separate channel from Google organic, this event suggests they're more connected than we thought. Quality signals appear to be shared across platforms. When one system decides your content isn't trustworthy, the others follow.

By February 10, Wikipedia was ranking above Grokipedia for the search query "Grokipedia." Let that sink in. Google ranked a competitor above Grokipedia for its own brand name.

That's about as definitive a signal as Google sends.

What Triggered the Decline?

Nobody outside Google knows the exact algorithmic cause. But the SEO community — including analysts who track core updates for a living — converged on several likely factors.

Scaled content abuse detection finally caught up.

Google has been explicitly targeting "scaled content abuse" since March 2024. The pattern is clear: millions of pages, all AI-generated, published at machine speed, with minimal human oversight. Grokipedia fit every criterion.

The initial indexing may have been a grace period. Google's systems need time to evaluate content at scale. You can index 900,000 pages relatively quickly. Evaluating whether those pages actually deserve to rank takes longer. The algorithms need user signals, quality rater feedback, and pattern analysis to make that determination.

Think of it like a probationary period. Google let Grokipedia in, watched how it performed, collected the data — and then made its call.

Quality rater feedback likely played a role.

Google employs thousands of human quality raters worldwide. They evaluate search results using detailed guidelines — the same guidelines that define E-E-A-T. Grokipedia's content would score poorly on every criterion: no demonstrated expertise, no human experience, questionable source credibility, and factual inaccuracies documented by PolitiFact.

Rater scores don't directly move an individual site's rankings, and they don't trigger manual actions. Google uses them to evaluate and tune the systems that judge site-wide quality, so a domain that rates poorly is exactly the kind of result those systems are built to demote.

User behaviour told its own story.

After the initial curiosity spike, Grokipedia's daily traffic settled at 30,000 to 50,000 visits. For a site with 6 million articles, that's anaemic. Users weren't returning. They weren't engaging deeply. They weren't bookmarking pages or sharing articles.

Google pays attention to user behaviour signals. When people click a result and immediately bounce back to the search results, that's a signal. When a massive site gets minimal return visits, that's a signal. When users spend the same amount of time they'd spend on a stub article despite the content being 3,000 words long, that's a signal too.

The cross-platform decline suggests coordinated quality signals.

The simultaneous drop across Google organic, AI Overviews, and ChatGPT is new. We haven't seen this pattern before with other sites. It suggests that quality evaluations are being shared — or at least correlated — across multiple AI discovery platforms.

For anyone building an AI content strategy, this is a meaningful development. Losing Google rankings is one thing. Losing Google, AI Overviews, and ChatGPT recommendations simultaneously is a completely different problem.

The Full Arc in Numbers

Here's the complete timeline in one table:

| Period | Google Clicks/Month | What Was Happening |
|---|---|---|
| Oct 2025 | ~0 | Launch. 885K articles. Curiosity traffic only. |
| Nov 2025 | 19 | Almost invisible in organic search. Indexing underway. |
| Dec 2025 | Rapid growth | Pages ranking. Keywords climbing. Honeymoon phase. |
| Jan 2026 | 3,200,000 | Peak. 6M keywords. 900K+ pages indexed. |
| Feb 6, 2026 | Declining fast | Cliff drop. Glenn Gabe flags the crash. |
| Feb 10, 2026 | Declining further | Wikipedia outranks Grokipedia for its own name. |

Four months. From nothing to everything to cautionary tale.

What This Actually Means for You

Grokipedia isn't just a story about one site. It's a real-time stress test of what Google will and won't accept in 2026. And the answer is more nuanced than "AI content is bad."

Because AI content isn't inherently bad. The problem is what happens when AI content is deployed without the things that make content trustworthy.

Here's what I took away from watching this unfold.

Good Technical SEO Gets You Indexed. It Doesn't Get You Trusted.

Grokipedia nailed the technical fundamentals. Server-side rendering, clean URLs, automated internal linking, fast crawl paths, proper schema markup. All of it worked exactly as intended. 900,000 pages indexed. 6 million keywords captured.

But here's the thing: technical SEO is the floor, not the ceiling. It gets Google to see your content. It doesn't convince Google to trust your content. Those are two completely different things.

If you're investing heavily in technical infrastructure but not in content quality, editorial oversight, or E-E-A-T signals — you're building a house on sand. It might look impressive for a while. But the tide always comes.

Google Gives New Domains a Honeymoon. Don't Confuse It With a Relationship.

The jump from 19 clicks to 3.2 million wasn't because Google evaluated Grokipedia's content and decided it was excellent. It was because Google hadn't finished evaluating it yet.

New domains, especially those with strong technical signals, associations with known entities, and consistent content patterns, can get a temporary ranking boost. Google's quality systems run on different timescales. Crawling and indexing happen in days. Quality evaluation takes weeks or months.

This is something every SEO should understand, because it affects how you interpret early results on new sites. If you launch a new content section and see rapid ranking gains, don't assume it will hold. Wait for the second evaluation wave. If rankings stick after 3-4 months, you're probably in good shape. If they don't — you have a quality problem, not a technical one.

I've seen this pattern with clients too. A site loses visibility that seemed rock-solid, and the root cause is almost always content quality that didn't survive Google's deeper evaluation pass.

Scale Amplifies Everything — Including Your Weaknesses

Publishing 6 million articles in three months is a remarkable technical achievement. But when those articles cite banned sources, reference themselves, and contain factual errors — the scale becomes the problem, not the advantage.

Every low-quality page is another data point telling Google your domain isn't trustworthy. At 100 pages, you might get away with inconsistent quality. At 6 million pages, the pattern becomes undeniable.

This is the lesson for anyone using AI-assisted content at scale. The volume isn't the issue. The volume without quality control is the issue. If you can't review what you're publishing, you can't vouch for it. And if you can't vouch for it, neither can Google.

There are real warning signs when automation starts working against you rather than for you. Grokipedia hit every single one of them.

E-E-A-T Isn't a Suggestion. It's the Whole Game for YMYL Content.

Grokipedia published articles about medical conditions, legal rights, financial decisions, and scientific claims. These are all YMYL topics — the category where Google applies its strictest quality standards.

For these topics, Google's guidelines are explicit: content should be created by people with relevant expertise, experience, or credentials. An AI model — no matter how sophisticated — doesn't have medical training, legal qualifications, or lived experience. It's synthesising patterns from training data.

That doesn't mean you can't use AI for YMYL content. It means the content needs expert oversight, proper attribution, and transparent methodology. "Written by AI" is fine. "Written by AI and reviewed by Dr. Sarah Chen, MD" is better. "Written by AI with no editorial oversight on medical topics" is exactly what got Grokipedia flagged.
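In a publishing pipeline, that rule can be as simple as a gate that refuses to ship AI-written YMYL drafts without a named reviewer. This is a minimal sketch under my own assumptions: the topic list is an illustrative subset, and the `Draft` fields and function name are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative subset of YMYL categories; a real list would be broader.
YMYL_TOPICS = {"medical", "legal", "financial"}


@dataclass
class Draft:
    title: str
    topic: str
    ai_generated: bool
    reviewer: Optional[str] = None  # named expert who signed off, if any


def ready_to_publish(draft: Draft) -> bool:
    """Block AI-generated YMYL drafts that have no named expert reviewer."""
    if draft.ai_generated and draft.topic in YMYL_TOPICS:
        return draft.reviewer is not None
    return True


unreviewed = Draft("Flu symptoms", "medical", ai_generated=True)
reviewed = Draft(
    "Flu symptoms", "medical", ai_generated=True, reviewer="Dr. Sarah Chen, MD"
)

print(ready_to_publish(unreviewed), ready_to_publish(reviewed))
```

The point of the gate isn't the code. It's that the reviewer field has to be filled in by a real person who is accountable for the content.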

Your Sources Are Part of Your Quality Score

This one surprised me, honestly. I expected Grokipedia's crash to be about content quality in general terms. But the citation analysis revealed something more specific.

12,522 low-credibility citations. 1,050 self-referential links. 5.5% of articles citing sources Wikipedia won't touch.

Who you cite matters. Not just for readers — for search engines.

If you're producing content at scale, audit your sources the way you'd audit your backlink profile. Bad outbound citations are the mirror image of bad inbound links. Both signal low quality. Both get noticed.
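A first-pass source audit can be automated. The sketch below buckets outbound citations into self-referential, low-credibility, and unflagged. The blocklist domains are placeholders I made up; a real audit would plug in a maintained credibility list. The function names are hypothetical too.

```python
from collections import Counter
from urllib.parse import urlsplit

# Placeholder blocklist; swap in a maintained credibility list for real use.
LOW_CREDIBILITY = {"example-rumours.test", "example-tabloid.test"}


def host(url: str) -> str:
    """Lower-cased hostname with a leading 'www.' removed."""
    name = (urlsplit(url).hostname or "").lower()
    return name[4:] if name.startswith("www.") else name


def classify_citation(url: str, own_domain: str) -> str:
    """Bucket a cited URL relative to the site doing the citing."""
    h = host(url)
    if h == own_domain or h.endswith("." + own_domain):
        return "self_referential"
    if h in LOW_CREDIBILITY:
        return "low_credibility"
    return "unflagged"


def audit(citations: list[str], own_domain: str) -> Counter:
    """Count citations per bucket for one site."""
    return Counter(classify_citation(u, own_domain) for u in citations)


# Hypothetical citation list for a site hosted at example-site.test.
report = audit(
    [
        "https://www.example-site.test/page/A",
        "https://docs.example-site.test/x",
        "https://example-rumours.test/story",
        "https://journal.example.org/paper",
    ],
    own_domain="example-site.test",
)
print(report)
```

"Unflagged" only means the domain isn't on your list. It still needs a human to judge whether the source actually supports the claim.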

When we audit sites, source quality is one of the things we check that most people don't think about. It's not just about whether your claims are accurate. It's about whether the sources you point to are trustworthy.

Visibility Is Now Cross-Platform. Losing Google Means Losing Everything.

This is perhaps the most significant takeaway from the Grokipedia crash, and it's something most SEO analysis hasn't fully processed yet.

Grokipedia didn't just lose Google rankings. It lost visibility in AI Overviews, AI Mode, and ChatGPT — all at the same time.

That suggests quality signals are shared, or at least correlated, across discovery platforms. If Google's systems determine your content isn't trustworthy, that assessment appears to propagate to other AI systems that reference web content.

For anyone building a content strategy focused on a single platform, this is a wake-up call. Organic search, AI search, and LLM visibility are increasingly part of the same quality ecosystem. You can't game one without affecting the others. And if the Grokipedia pattern holds, you may not be able to lose one without losing the others.

The old SEO playbook was about ranking in Google. The 2026 playbook is about being trusted across every system that surfaces content.

The Honeymoon Period Is Your Testing Window

Here's a practical takeaway that doesn't get enough attention.

Google's honeymoon period for new content — that initial boost before deeper quality evaluation kicks in — is actually a useful diagnostic tool. If you publish content and see an initial ranking boost followed by a decline, that's Google's way of telling you the content didn't pass the second-round evaluation.

Pay attention to the pattern. If your content holds position after 60-90 days, it has probably passed Google's deeper quality evaluation. If it drops, go back and look at E-E-A-T signals, source quality, and whether the content actually satisfies the search intent better than what's already ranking.
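If you track weekly clicks per content section, the peak-then-decline pattern is easy to flag automatically. This is a rough sketch: the 50% threshold, the function name, and the sample numbers are my assumptions, not a known Google cutoff.

```python
def honeymoon_drop(weekly_clicks: list[int], drop_threshold: float = 0.5) -> bool:
    """True if the latest week has fallen at least drop_threshold below an earlier peak."""
    if len(weekly_clicks) < 2:
        return False
    peak = max(weekly_clicks[:-1])   # best week before the latest one
    latest = weekly_clicks[-1]
    return peak > 0 and (peak - latest) / peak >= drop_threshold


# Hypothetical weekly click series for two content sections.
fading = [10, 200, 900, 1000, 400]    # 60% below peak
holding = [10, 200, 900, 1000, 950]   # 5% below peak

print(honeymoon_drop(fading), honeymoon_drop(holding))
```

Seasonality and one-off news spikes will trip a check this simple, so treat a flag as a prompt to investigate rather than a verdict.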

Don't wait for the crash. Use the honeymoon as your early warning system.

The Practitioner's Bottom Line

Grokipedia is the most expensive SEO experiment we've seen in years. It had the backing of a billion-dollar AI company, brand-name recognition, state-of-the-art language models, and flawless technical infrastructure. It still got demoted in four months.

The lesson isn't that AI content doesn't work. The lesson is that AI content without trust signals doesn't work. And the bar for "trust" is higher in 2026 than it's ever been.

If you're using AI to produce content — and honestly, you probably should be — here's the practical checklist:

Have a human review it. Not every piece needs a line-by-line edit. But someone with subject-matter knowledge should verify accuracy, check sources, and flag anything that doesn't pass the smell test. Especially for YMYL topics.

Cite credible sources. If your AI pulls from junk sources, your content inherits that quality score. Review your citations the way you'd review your backlinks.

Don't publish everything at once. Ramp up gradually. Let Google's systems see consistent quality at lower volumes before you scale. 50 solid articles per month beats 5,000 untouched ones.

Build real E-E-A-T signals. Author bios. Editorial standards. Expert contributors. Transparent methodology. These aren't optional extras. They're the difference between content that ranks and content that gets pulled during the next core update.

Monitor quality metrics, not just traffic. Run an audit on your content the way you would on your site's technical health. A honeymoon traffic spike means nothing if the fundamentals aren't there to sustain it.

The sites that will win the AI content era aren't the ones that publish the most. They're the ones that publish content worth trusting — whether a human wrote it, an AI wrote it, or both.

Grokipedia proved that scale without trust is a house of cards. Build yours on something sturdier.

Data sourced from Sistrix, Semrush, SimilarWeb, PolitiFact, Search Engine Roundtable, and independent SEO analysts including Glenn Gabe (GSQi) and Malte Landwehr (Peec.AI).